Power

Power analysis is currently in alpha (version 2.9+ required). To enable power analysis, go to your organizational settings and toggle "Enable Power Calculator" in "Experiment Settings." Currently GrowthBook offers only frequentist power analysis; Bayesian power analysis is coming soon.

What is power analysis?

Power analysis helps you estimate how long your experiment needs to run. Power is the probability of observing a statistically significant result, given that your feature has a specific effect on your metric.

When should I run power analysis?

You should run power analysis before your experiment starts, to determine how long you should run your experiment. The longer the experiment runs, the more users are in the experiment (i.e., your sample size increases). Increasing your sample size lowers the uncertainty around your estimate, which makes it more likely that you achieve a statistically significant result.

What is a minimum detectable effect, and how do I interpret it?

Recall that your relative effect size (which we often refer to simply as the effect size) is the percentage improvement in your metric caused by your feature. For example, suppose that average revenue per customer under control is $100, while under treatment you expect that it will be $102. This corresponds to a ($102 - $100)/$100 = 2% effect size. Effect size is often referred to as lift.
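A minimal sketch of the lift arithmetic from this example, in Python; the helper name `relative_lift` is hypothetical and is not part of GrowthBook.

```python
def relative_lift(control_mean: float, treatment_mean: float) -> float:
    """Relative effect size (lift): (treatment - control) / control."""
    return (treatment_mean - control_mean) / control_mean


# Average revenue per customer: 100 under control, 102 expected under treatment.
lift = relative_lift(100.0, 102.0)
print(f"{lift:.1%}")  # 2.0%
```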

Given the sample variance of your metric and the sample size, the minimum detectable effect (MDE) is the smallest effect size for which your power will be at least 80%.

GrowthBook includes both power and MDE results to ensure that customers comfortable with either tool can use them to make decisions. The MDE can be thought of as the smallest effect that you will be able to detect most of the time in your experiment. We want to be able to detect subtle changes in metrics, so smaller MDEs are better.

For example, suppose your MDE is 2%. If you believe your feature could drive an improvement of at least 2%, then your experiment is high-powered. If you believe your feature will probably drive only something like a 0.5% improvement (which can still be huge!), then you need more users to detect this effect.
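Because the standard error of the lift shrinks roughly like $1/\sqrt{N}$, the MDE does too, so cutting the MDE by a factor of 4 takes roughly 16 times as many users. The hypothetical helper below makes that back-of-the-envelope calculation. It is an approximation that ignores the small dependence of the lift's variance on the effect itself; rerunning the power calculator with your real inputs gives the actual figure.

```python
def sample_size_multiplier(current_mde_pct: float, target_mde_pct: float) -> float:
    """Approximate factor by which the sample size must grow to reach target_mde_pct.

    Assumes the MDE is proportional to 1 / sqrt(N), so N scales with the
    squared ratio of the two MDEs.
    """
    return (current_mde_pct / target_mde_pct) ** 2


# Going from a 2% MDE to a 0.5% MDE needs roughly 16x as many users.
print(sample_size_multiplier(2.0, 0.5))  # 16.0
```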

How do I run a power analysis?

- From the GrowthBook home page, click "Experiments" on the left tab. In the top right, click "+ Power Calculation."

- Select "New Calculation".

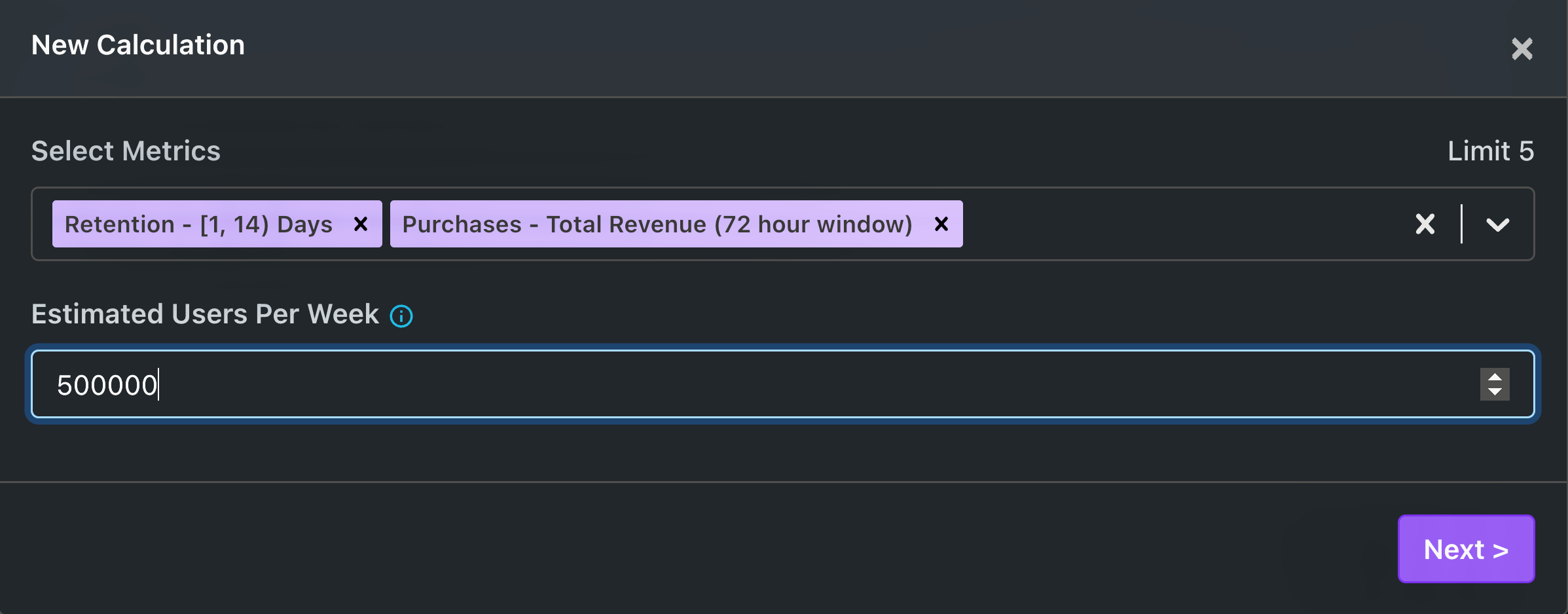

- On the first page you:

  - Select your metrics (maximum of 5). Currently binomial, count, duration, and revenue metrics are supported; ratio and quantile metrics are not.

  - Enter your "Estimated users per week." This is the average number of new users your experiment will add each week. See the FAQ below for two simple estimation approaches.

  - Click "Next".

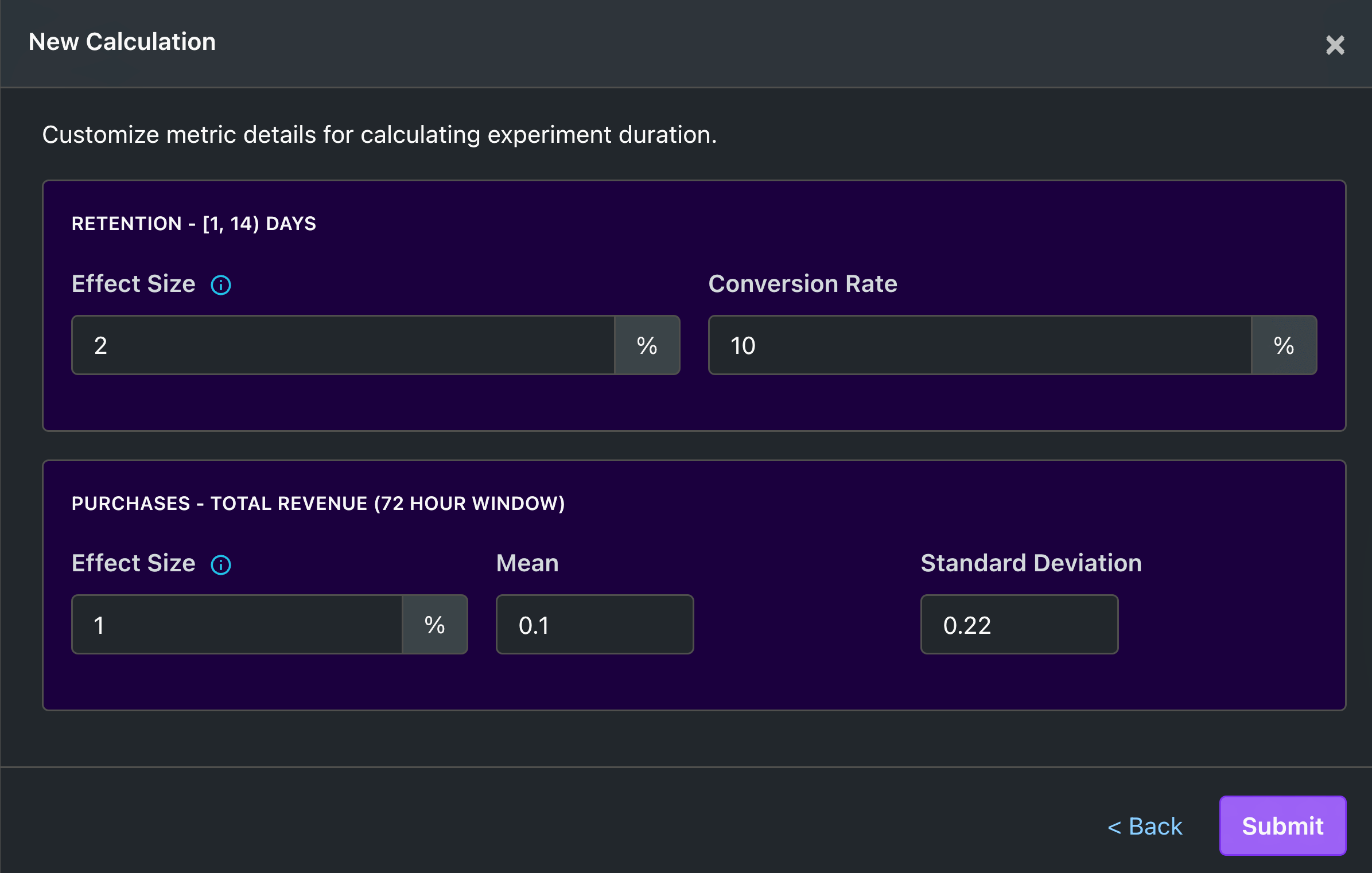

- On the second page you:

  - Enter the "Effect Size" for each metric. Effect size is the percentage improvement in your metric (i.e., the lift) that you anticipate your feature will cause. Choosing an effect size requires care; see "How should I pick my effect size?" below.

  - For binomial metrics, enter the conversion rate.

  - For other metrics, enter the metric mean and standard deviation, both computed across users in your experimental population.

  - Click "Next".

- Now you have results! See "How do I interpret power analysis results?" below for how to read them.

  - By clicking "Edit" in the "Analysis Settings" box, you can toggle sequential testing on and off to compare power. Enabling sequential testing decreases power.

  - You can change the number of variations in your experiment. More variations mean a smaller sample size per variation, and therefore lower power.

  - If you want to modify your inputs, click the "Edit" button next to "New Calculation" in the top right corner.
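To see why extra variations cost power, here is a sketch of how traffic is divided, assuming an even split across all variations (control included), consistent with the equal-sample-size assumption in the implementation notes. The function name is hypothetical, and the 500,000 weekly users are borrowed from the worked example in the next section.

```python
def users_per_variation(weekly_users: float, weeks: int, n_variations: int) -> float:
    """Users in each variation (control included) after `weeks` weeks.

    Assumes traffic is split evenly across all variations.
    """
    return weekly_users * weeks / n_variations


for n_variations in (2, 3, 4):
    n = users_per_variation(500_000, 4, n_variations)
    # The standard error scales like 1 / sqrt(n), so relative to a
    # 2-variation test it grows by a factor of sqrt(n_variations / 2).
    se_factor = (n_variations / 2) ** 0.5
    print(f"{n_variations} variations: {n:,.0f} users each, standard error x{se_factor:.2f}")
```

A larger standard error means a lower ratio of effect to noise, which is what drives power down.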

How do I interpret power analysis results?

In this section we run through an example power analysis. After starting power analysis, you will need to select metrics and enter estimated users per week.

In our example, we choose a binomial metric (Retention - [1, 14) Days) and a revenue metric (Purchases - Total Revenue (72 hour window)). We refer to these metrics as "retention" and "revenue" respectively going forward. We estimate that 500,000 new users per week visit our website.

You then input your effect sizes, which are your best guesses for the metric improvements your feature will cause.

We provide guidance for effect size selection in "How should I pick my effect size?" below. For our feature we anticipate a 2% improvement in retention. For retention, the conversion rate is 10%. This 10% number should come from an offline query that measures the conversion rate in your experimental population. We expect a 1% improvement in revenue, which has a mean of $0.10 and a standard deviation of $0.22 (as with the conversion rate, the mean and standard deviation come from an offline query that you run on your population). We then submit our data.
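The offline query boils down to a few per-user summaries. A minimal sketch with Python's `statistics` module follows; the toy data and function names are hypothetical, and it assumes one value per user in the experimental population (with zeros for users who generated no revenue).

```python
from statistics import fmean, stdev


def conversion_rate(converted: list[bool]) -> float:
    """Share of users who converted (the input for binomial metrics)."""
    return sum(converted) / len(converted)


def mean_and_sd(per_user_values: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation across users (input for other metrics).

    Expects one value per user in the experimental population, including
    zeros for users who, for example, made no purchase.
    """
    return fmean(per_user_values), stdev(per_user_values)


# Toy data standing in for the result of an offline query.
print(conversion_rate([True] + [False] * 9))  # 0.1
print(mean_and_sd([0.0, 0.0, 0.0, 0.5]))      # (0.125, 0.25)
```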

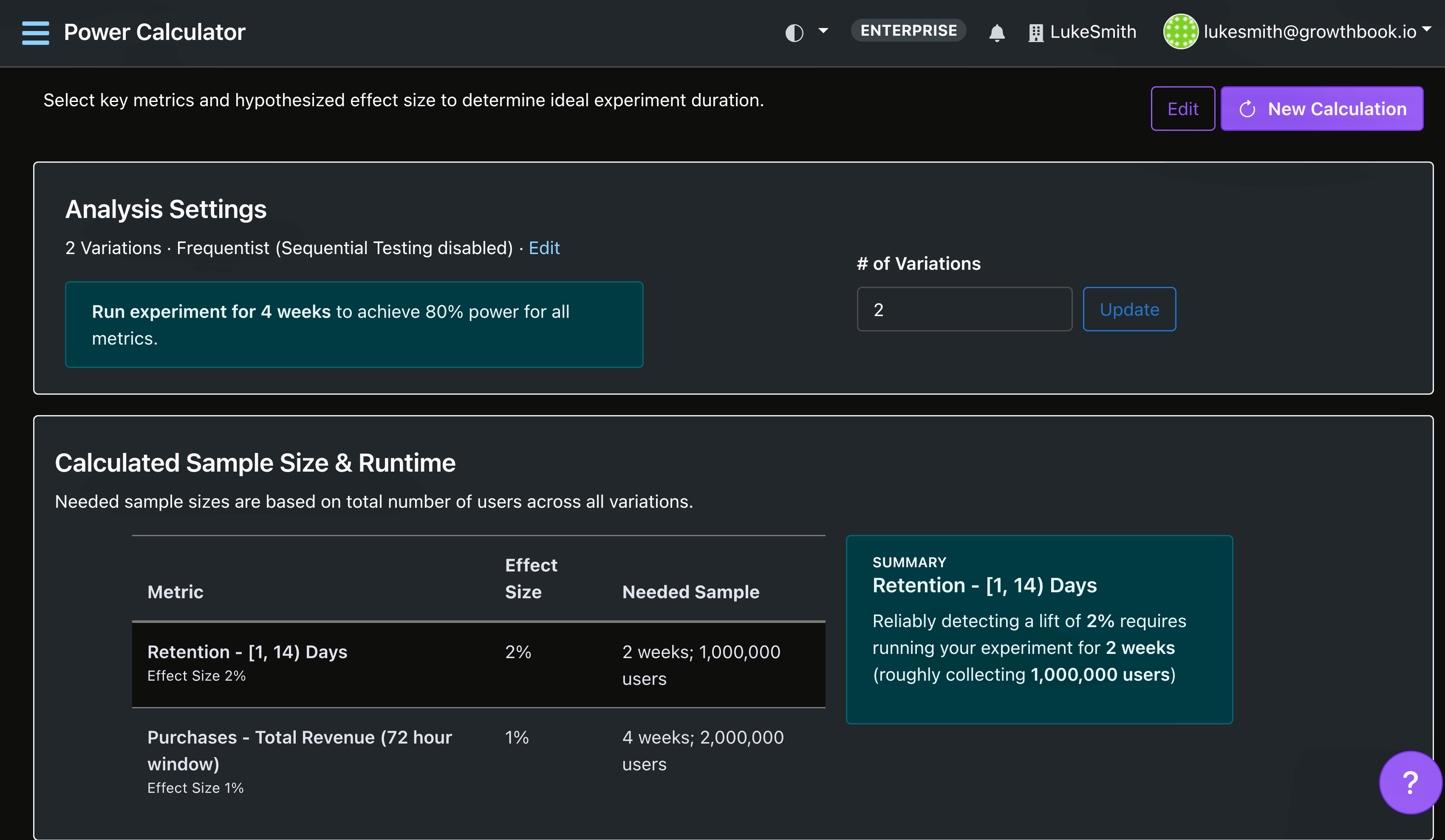

Now we can see the results!

At the top of the page is a box called "Analysis Settings." The summary here says, "Run experiment for 4 weeks to achieve 80% power for all metrics." This is the most important piece of information from power analysis. If running your experiment for 4 weeks is compatible with your business constraints, costs, and rollout timeframe, then you do not need to dive into the numbers below this statement. To rerun the power results with more than 2 variations, set "# of Variations" and then click "Update". To toggle "Sequential Testing" on or off, press the "Edit" button and select the appropriate option. Enabling sequential testing reduces power.

Below "Analysis Settings" is "Calculated Sample Size and Runtime", which shows, for each metric, the number of weeks (or equivalently, the number of users) needed to achieve 80% power. Clicking a row in the table updates the information in the box to the right. We expect 80% power for retention if we run the experiment for 2 weeks. For revenue, we need to run the experiment for 4 weeks to achieve at least 80% power.

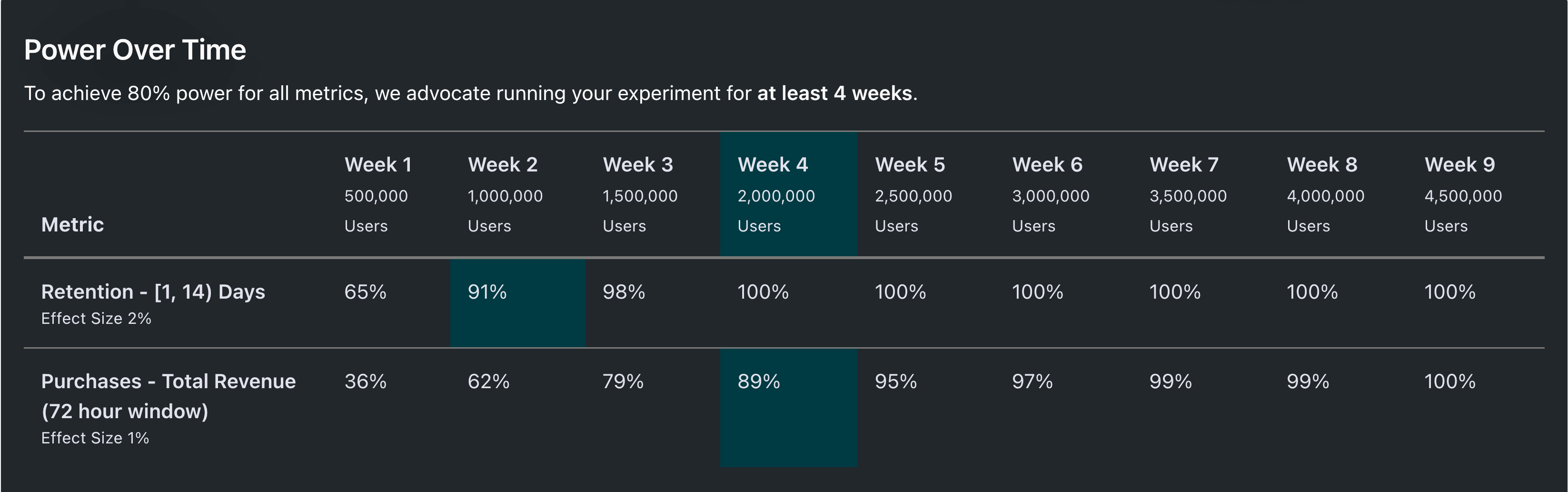

Beneath "Calculated Sample Size and Runtime" is "Power Over Time", which contains power estimates by metric.

The columns in Power Over Time correspond to different weeks. For example, in the first week power for retention is 65%. The highlighted column in week 4 is the first week where at least 80% power is achieved for all metrics. As expected, power increases over time, as new users are added to the experiment.
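For readers who want to check the numbers, the sketch below recomputes power over time for the two example metrics using the formulas in the GrowthBook implementation section (delta-method standard error for the relative lift, equal sample sizes and variances, 2-sided test at $\alpha = 0.05$). It is a hypothetical re-implementation, not GrowthBook's code, so small differences from the app are possible.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def lift_standard_error(mean_a: float, sd: float, lift: float, n_per_variation: float) -> float:
    """Delta-method standard error of the relative lift (equal variances assumed)."""
    mean_b = mean_a * (1 + lift)
    variance = sd**2 / n_per_variation * (1 / mean_a**2 + mean_b**2 / mean_a**4)
    return variance**0.5


def two_sided_power(lift: float, se: float, alpha: float = 0.05) -> float:
    """1 - Phi(z - lift/se) + Phi(-z - lift/se), with z = Z_{1-alpha/2}."""
    z = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return 1 - STD_NORMAL.cdf(z - lift / se) + STD_NORMAL.cdf(-z - lift / se)


def power_by_week(mean: float, sd: float, lift: float, weekly_users: int,
                  n_variations: int = 2, weeks: int = 6) -> list[float]:
    """Power at the end of each week, splitting users evenly across variations."""
    powers = []
    for week in range(1, weeks + 1):
        n = weekly_users * week / n_variations
        powers.append(two_sided_power(lift, lift_standard_error(mean, sd, lift, n)))
    return powers


# Inputs from the example: (mean, standard deviation, expected lift).
example_metrics = {
    "retention": (0.10, (0.10 * 0.90) ** 0.5, 0.02),  # binomial: sd = sqrt(p * (1 - p))
    "revenue": (0.10, 0.22, 0.01),
}
for name, (mean, sd, lift) in example_metrics.items():
    powers = power_by_week(mean, sd, lift, weekly_users=500_000)
    print(name, [f"{p:.0%}" for p in powers])
```

With these inputs the sketch gives about 65% power for retention in week 1; retention first reaches 80% in week 2 and revenue in week 4, in line with the results described above.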

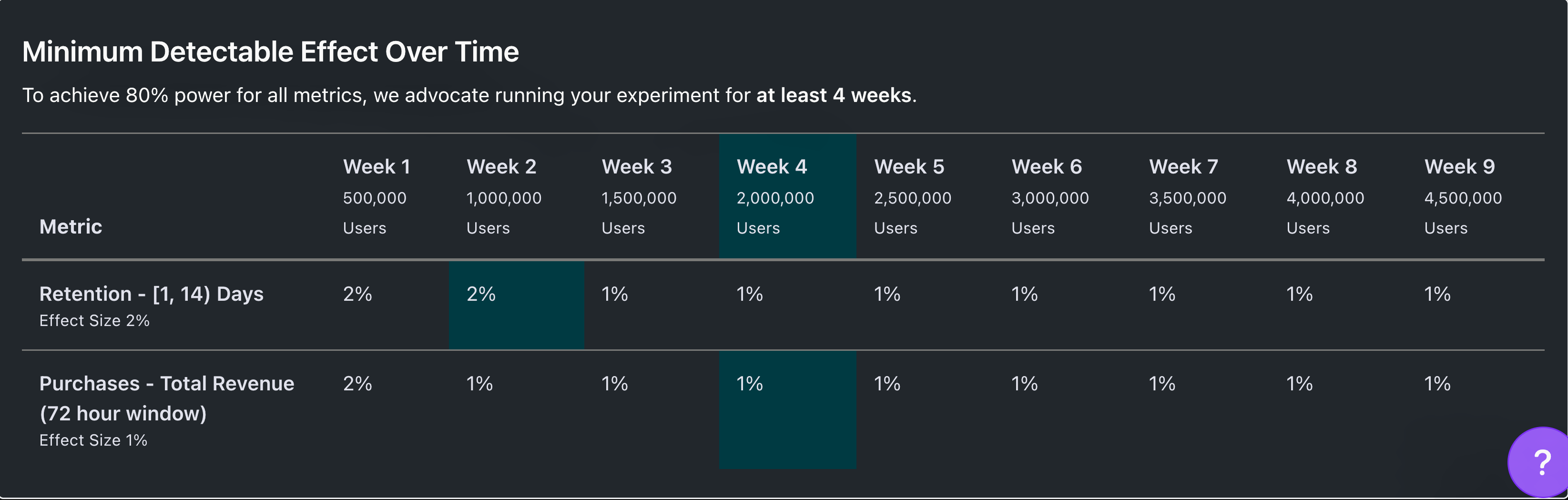

Beneath Power Over Time is Minimum Detectable Effect Over Time.

Minimum Detectable Effect Over Time is structured the same as Power Over Time, except it contains MDEs rather than power estimates. The Week 1 revenue MDE is 2%. This means that if your true lift is 2%, after 1 week you will have at least an 80% chance of observing a statistically significant result. As expected, MDEs decrease over time, as new users are added to the experiment.

If you see N/A in your MDE results, the MDE calculation has no valid solution for your inputs; you need to increase your number of weekly users (see the FAQ below).

It can be helpful to see power estimates at different effect sizes, different estimates of weekly users, etc. To modify your inputs, click the "Edit" button next to "New Calculation" in the top right corner.

How should I pick my effect size?

Selecting your effect size for power analysis requires careful thought. Your effect size is your anticipated metric lift due to your feature. Obviously you do not have complete information about the true lift, otherwise you would not be running the experiment!

We advocate running power analysis for multiple effect sizes. The following questions may elicit helpful effect sizes:

- What is your best guess for the potential improvement due to your feature? Are there similar historical experiments, or pilot studies, and if so, what were their lifts?

- Suppose your feature is amazing - what do you think the lift would be?

- Suppose your feature impact is smaller than you think - how small could it be?

Ideally your experiment has high power (see "What is a high-powered experiment?" below) across a range of effect sizes.
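One way to follow this advice is to loop over a pessimistic, a best-guess, and an optimistic lift and compare the required runtime. The sketch below does this for the revenue example (mean of 0.10, standard deviation of 0.22, 500,000 users per week, 2 variations); the function is hypothetical and uses the same 2-sided power formula as the implementation section.

```python
from statistics import NormalDist
from typing import Optional

STD_NORMAL = NormalDist()


def weeks_to_target_power(mean: float, sd: float, lift: float, weekly_users: int,
                          n_variations: int = 2, target: float = 0.8,
                          alpha: float = 0.05, max_weeks: int = 52) -> Optional[int]:
    """First week whose 2-sided power reaches `target`, or None within max_weeks."""
    z = STD_NORMAL.inv_cdf(1 - alpha / 2)
    mean_b = mean * (1 + lift)
    for week in range(1, max_weeks + 1):
        n = weekly_users * week / n_variations  # users per variation
        se = (sd**2 / n * (1 / mean**2 + mean_b**2 / mean**4)) ** 0.5
        power = 1 - STD_NORMAL.cdf(z - lift / se) + STD_NORMAL.cdf(-z - lift / se)
        if power >= target:
            return week
    return None


# Pessimistic, best-guess, and optimistic lifts for the revenue example.
for lift in (0.005, 0.01, 0.02):
    weeks = weeks_to_target_power(0.10, 0.22, lift, weekly_users=500_000)
    print(f"{lift:.1%} lift: {weeks} weeks")
```

For this metric the sketch returns 13, 4, and 1 weeks for lifts of 0.5%, 1%, and 2%, which shows how sensitive the runtime is to the assumed effect size.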

What is a high-powered experiment?

In clinical trials, the standard is 80% power. This means that if you were to run your clinical trial 100 times with different patients and different randomizations each time, then you would observe statistically significant results in roughly 80 of those trials. When calculating MDEs, we use this default of 80%.
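The "100 trials" interpretation can be checked by simulation. This sketch assumes the lift estimate is normally distributed around the true lift with a known standard error, picks the lift that gives 80% power analytically, and counts how often a 2-sided z-test is significant; all names and numbers are illustrative.

```python
import random
from statistics import NormalDist


def simulated_power(true_lift: float, se: float, alpha: float = 0.05,
                    n_trials: int = 10_000, seed: int = 0) -> float:
    """Share of simulated experiments whose 2-sided z-test is significant.

    Assumes the lift estimate is normal around the true lift with known
    standard error `se`.
    """
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    significant = 0
    for _ in range(n_trials):
        estimate = rng.gauss(true_lift, se)  # one simulated experiment
        if abs(estimate / se) > z:
            significant += 1
    return significant / n_trials


# Lift with 80% analytic power: (Z_{0.975} + Z_{0.80}) * se.
se = 0.01
lift = (NormalDist().inv_cdf(0.975) + NormalDist().inv_cdf(0.80)) * se
print(simulated_power(lift, se))  # close to 0.80
```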

That being said, running an experiment with less than 80% power can still help your business. The purpose of an experiment is to learn about your business, not simply to roll out features that achieve statistically significant improvement. The biggest cost to running low-powered experiments is that your results will be noisy. This usually leads to ambiguity in the rollout decision.

FAQ

Frequently asked questions:

- How do I pick the number for Estimated Users Per Week? If you know your number of daily users, you can multiply that by 7. If traffic varies by day of the week, you may want to use something like 5 × (average weekday traffic) + 2 × (average weekend traffic).

- How can I increase my experimental power? You can increase experimental power by increasing the number of users in your experiment, for example by expanding your population to new segments or by including a larger percentage of user traffic in your experiment. Similarly, if you have more than 2 variations, reducing the number of variations increases power.

- What if my experiment is low-powered? Should I still run it? The biggest cost to running a low-powered experiment is that your results will probably be noisy, and you will face ambiguity in your rollout/rollback decision. That being said, you will probably still have learnings from your experiment.

- What does "N/A" mean for my MDE result? It means there is no solution for the MDE, given the current number of weekly users, control mean, and control variance. Increase your number of weekly users or reduce your number of treatment variations.
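The two estimation approaches from the first FAQ item, as hypothetical helper functions (the traffic numbers are made up):

```python
def weekly_users_from_daily(avg_daily_users: int) -> int:
    """Weekly users when daily traffic is roughly constant."""
    return 7 * avg_daily_users


def weekly_users_from_weekday_weekend(avg_weekday_users: int, avg_weekend_users: int) -> int:
    """Weekly users when traffic differs: 5 weekdays plus 2 weekend days.

    Summing daily counts double-counts users who return on several days; a
    distinct-user count per week is closer to the number of new users added.
    """
    return 5 * avg_weekday_users + 2 * avg_weekend_users


print(weekly_users_from_daily(70_000))                    # 490000
print(weekly_users_from_weekday_weekend(80_000, 50_000))  # 500000
```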

GrowthBook implementation

Below we describe technical details of our implementation. First we start with the definition of power.

Define:

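- the false positive rate as $\alpha$ (GrowthBook default is $\alpha = 0.05$).

- the critical values $Z_{1-\alpha/2} = \Phi^{-1}(1-\alpha/2)$ and $Z_{1-\alpha} = \Phi^{-1}(1-\alpha)$, where $\Phi$ is the CDF of the standard normal distribution and $\Phi^{-1}$ is its inverse.

- the true relative treatment effect as $\Delta$, its estimate as $\hat{\Delta}$, and its estimated standard error as $\hat{\sigma}_{\Delta}$. Note that as the sample size $N$ increases, $\hat{\sigma}_{\Delta}$ decreases in proportion to $1/\sqrt{N}$.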
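These critical values can be computed with the standard library's `statistics.NormalDist`, whose `inv_cdf` method plays the role of $\Phi^{-1}$ (a minimal sketch; any normal quantile function works).

```python
from statistics import NormalDist

ALPHA = 0.05  # GrowthBook's default false positive rate
std_normal = NormalDist()  # mean 0, standard deviation 1

z_two_sided = std_normal.inv_cdf(1 - ALPHA / 2)  # Z_{1-alpha/2}
z_one_sided = std_normal.inv_cdf(1 - ALPHA)      # Z_{1-alpha}
print(round(z_two_sided, 3), round(z_one_sided, 3))  # 1.96 1.645
```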

We make the following assumptions:

- equal sample sizes across control and treatment variations;

- equal variance across control and treatment variations;

- observations across users are independent and identically distributed;

- all metrics have finite variance; and

- you are running a two-sample t-test. If in practice you use CUPED, your power will typically be higher. Use CUPED!

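For a 1-sided test, the probability of a statistically significant result (i.e., the power) is

$$\pi = P\left(\frac{\hat{\Delta}}{\hat{\sigma}_{\Delta}} > Z_{1-\alpha}\right) = P\left(\frac{\hat{\Delta} - \Delta}{\hat{\sigma}_{\Delta}} > Z_{1-\alpha} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right) = 1 - \Phi\left(Z_{1-\alpha} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right).$$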
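A direct transcription of the 1-sided power formula, as a sketch (the function name is hypothetical). With $\Delta = 0$ the "power" equals the false positive rate $\alpha$, which is a quick sanity check.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_one_sided(delta: float, se: float, alpha: float = 0.05) -> float:
    """1 - Phi(Z_{1-alpha} - delta / se) for a 1-sided test of delta > 0."""
    z = STD_NORMAL.inv_cdf(1 - alpha)
    return 1 - STD_NORMAL.cdf(z - delta / se)


print(round(power_one_sided(0.0, 0.01), 3))  # 0.05: no true effect, power equals alpha
print(round(power_one_sided(0.025, 0.01), 3))
```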

For a 2-sided test (all GrowthBook tests are 2-sided), the power is composed of the probability of a statistically significant positive result and a statistically significant negative result. Using the same algebra as above (except using $Z_{1-\alpha/2}$ for the critical value), the probability of a statistically significant positive result is

$$1 - \Phi\left(Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right).$$

The probability of a statistically significant negative result is $\Phi\left(-Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right)$.

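For a 2-sided test, the power equals

$$\pi = 1 - \Phi\left(Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right) + \Phi\left(-Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right).$$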
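The 2-sided formula, returning both tails separately so you can see how small the negative-result term is for a positive effect (a sketch; names and inputs are hypothetical):

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def power_two_sided(delta: float, se: float, alpha: float = 0.05) -> tuple[float, float, float]:
    """Return (total power, P(significant positive), P(significant negative))."""
    z = STD_NORMAL.inv_cdf(1 - alpha / 2)
    positive = 1 - STD_NORMAL.cdf(z - delta / se)
    negative = STD_NORMAL.cdf(-z - delta / se)
    return positive + negative, positive, negative


total, positive, negative = power_two_sided(delta=0.028, se=0.01)
print(f"{total:.4f} = {positive:.4f} + {negative:.7f}")
```

With $\Delta/\hat{\sigma}_{\Delta} = 2.8$, the negative-result term is on the order of $10^{-6}$, which is why the 2-sided MDE calculation can safely ignore it.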

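The MDE is the smallest $\Delta$ for which nominal power $\pi$ (i.e., 80%) is achieved. For a 1-sided test there is a closed form solution for the MDE. Solving the 1-sided power equation for $\Delta$ produces $\Delta = \hat{\sigma}_{\Delta}\left(Z_{1-\alpha} - \Phi^{-1}(1-\pi)\right)$.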
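The closed-form 1-sided MDE, treating $\hat{\sigma}_{\Delta}$ as fixed (for relative effects it is not, which is the subtlety addressed next). Plugging the MDE back into the 1-sided power formula recovers 80%; the function name and the standard error of 0.01 are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def mde_one_sided(se: float, alpha: float = 0.05, power: float = 0.8) -> float:
    """Closed-form 1-sided MDE: se * (Z_{1-alpha} - Phi^{-1}(1 - power))."""
    return se * (STD_NORMAL.inv_cdf(1 - alpha) - STD_NORMAL.inv_cdf(1 - power))


se = 0.01
mde = mde_one_sided(se)
# Plug the MDE back into 1 - Phi(Z_{1-alpha} - delta / se).
achieved = 1 - STD_NORMAL.cdf(STD_NORMAL.inv_cdf(0.95) - mde / se)
print(round(mde, 4), round(achieved, 3))  # 0.0249 0.8
```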

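In the 2-sided case there is no closed form solution, so we instead invert the equation below, which ignores the negligible term $\Phi\left(-Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right)$ and produces power estimates very close to 0.8:

$$\pi = 1 - \Phi\left(Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}}\right).$$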

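One subtlety with finding MDEs for relative treatment effects is that the uncertainty of the estimate depends upon the means of the variations, and therefore upon $\Delta$ itself.

So when inverting the power formula above to find the minimum $\Delta$ that produces at least 80% power, the uncertainty term $\hat{\sigma}_{\Delta}$ changes as $\Delta$ changes.

We solve for $\Delta$ in the equation below, where we make explicit the dependence of $\hat{\sigma}_{\Delta}$ on $\Delta$:

$$\pi = 1 - \Phi\left(Z_{1-\alpha/2} - \frac{\Delta}{\hat{\sigma}_{\Delta}(\Delta)}\right).$$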

Define $\mu_A$ as the population mean of variation $A$ and $\sigma^2$ as the population variance.

For variation $B$ analogously define $\mu_B$; recall that we assume equal variance across treatment arms.

Define $N$ as the per-variation sample size.

Define the sample counterparts as ($\hat{\mu}_A$, $\hat{\sigma}^2_A$, $\hat{\mu}_B$, and $\hat{\sigma}^2_B$).

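Recall that our lift is defined as $\Delta = \frac{\mu_B - \mu_A}{\mu_A}$ and the variance of the sample lift is

$$\hat{\sigma}^2_{\Delta} = \frac{\sigma^2}{N}\frac{1}{\mu_A^2} + \frac{\sigma^2}{N}\frac{\mu_B^2}{\mu_A^4}.$$

Define the constant $k = Z_{1-\alpha/2} - \Phi^{-1}(1-\pi)$. We solve for $\mu_B$ in:

$$\frac{\mu_B - \mu_A}{\mu_A} = k\sqrt{\frac{\sigma^2}{N}\frac{1}{\mu_A^2} + \frac{\sigma^2}{N}\frac{\mu_B^2}{\mu_A^4}}.$$

Rearranging terms shows that

$$\mu_B^2\left(1 - \frac{\sigma^2 k^2}{N\mu_A^2}\right) - 2\mu_A\mu_B + \left(\mu_A^2 - \frac{\sigma^2 k^2}{N}\right) = 0.$$

This is quadratic in $\mu_B$ and has solution

$$\mu_B = \frac{2\mu_A \pm \sqrt{4\mu_A^2 - 4\left(1 - \frac{\sigma^2 k^2}{N\mu_A^2}\right)\left(\mu_A^2 - \frac{\sigma^2 k^2}{N}\right)}}{2\left(1 - \frac{\sigma^2 k^2}{N\mu_A^2}\right)}.$$

The discriminant reduces to

$$\frac{4\sigma^2 k^2}{N}\left(2 - \frac{\sigma^2 k^2}{N\mu_A^2}\right),$$

so a solution for $\mu_B$ exists if and only if

$$N \geq \frac{\sigma^2 k^2}{2\mu_A^2}.$$

Similarly, the MDE returned can be negative if the denominator is negative, which is nonsensical.

We return cases only where the denominator is positive, which occurs if and only if:

$$N > \frac{\sigma^2 k^2}{\mu_A^2}.$$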

This condition is stricter than the condition guaranteeing existence of a solution.

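Therefore, there will be some combinations of $(\mu_A, \sigma^2, \alpha, \pi)$ where the MDE does not exist for a given $N$. If $\alpha = 0.05$ and $\pi = 0.8$, then $k \approx 1.96 + 0.84 = 2.8$, so $k^2 \approx 7.85$. Therefore, a rule of thumb is that $N$ needs to be roughly 8 times larger than the ratio of the variance to the squared mean, $\sigma^2/\mu_A^2$, to return an MDE. When it is not, $N$ needs to be increased.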
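The closed-form solution above translates into a short function. This is a sketch, not GrowthBook's code: it assumes a positive control mean, takes the "+" root (the "-" root corresponds to a negative lift), and returns `None` where GrowthBook would show N/A. The check at the end plugs the MDE back into the 2-sided power equation that ignores the negative-result term, which should return the nominal 80%.

```python
from math import sqrt
from statistics import NormalDist
from typing import Optional

STD_NORMAL = NormalDist()


def relative_mde(mean_a: float, variance: float, n_per_variation: float,
                 alpha: float = 0.05, power: float = 0.8) -> Optional[float]:
    """Relative MDE from the quadratic in mu_B; None if no valid MDE exists."""
    k = STD_NORMAL.inv_cdf(1 - alpha / 2) - STD_NORMAL.inv_cdf(1 - power)
    c = k**2 * variance / (n_per_variation * mean_a**2)  # sigma^2 k^2 / (N mu_A^2)
    if c >= 1:
        # Denominator 1 - c is not positive, i.e. N <= k^2 sigma^2 / mu_A^2.
        return None
    # "+" root of the quadratic; the discriminant equals 4 mu_A^2 c (2 - c).
    mean_b = mean_a * (1 + sqrt(c * (2 - c))) / (1 - c)
    return (mean_b - mean_a) / mean_a


def lift_standard_error(mean_a: float, variance: float, lift: float, n_per_variation: float) -> float:
    """Delta-method standard error of the sample lift (equal variances)."""
    mean_b = mean_a * (1 + lift)
    return sqrt(variance / n_per_variation * (1 / mean_a**2 + mean_b**2 / mean_a**4))


# Revenue example: mean 0.10, sd 0.22, 250,000 users per variation after week 1.
mde = relative_mde(0.10, 0.22**2, 250_000)
se = lift_standard_error(0.10, 0.22**2, mde, 250_000)
approx_power = 1 - STD_NORMAL.cdf(STD_NORMAL.inv_cdf(0.975) - mde / se)
print(f"MDE {mde:.2%}, power at MDE {approx_power:.3f}")
print(relative_mde(0.10, 0.22**2, 30))  # None
```

For the revenue example after one week the sketch returns an MDE of about 1.8%, and with only 30 users per variation it returns `None` because $N$ falls below $k^2\sigma^2/\mu_A^2 \approx 38$.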

To estimate power under sequential testing, we adjust the variance term to account for sequential testing, and then input this adjusted variance into our power formula. We assume that you look at the data only once, so our power estimate below is a lower bound for the actual power under sequential testing. Otherwise we would have to make assumptions about the temporal correlation of the data generating process.

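In sequential testing we construct confidence intervals as

$$\hat{\Delta} \pm \hat{\sigma}_{\Delta}\sqrt{N}\sqrt{\frac{2(N\rho^2 + 1)}{N^2\rho^2}\log\left(\frac{\sqrt{N\rho^2 + 1}}{\alpha}\right)}$$

where

$$\rho = \sqrt{\frac{-2\log(\alpha) + \log\left(-2\log(\alpha) + 1\right)}{N^{*}}}$$

and $N^{*}$ is a tuning parameter. This approach relies upon asymptotic normality. For power analysis we rewrite the confidence interval as

$$\hat{\Delta} \pm Z_{1-\alpha/2}\,\tilde{\sigma}_{\Delta}$$

where

$$\tilde{\sigma}_{\Delta} = \frac{\hat{\sigma}_{\Delta}\sqrt{N}}{Z_{1-\alpha/2}}\sqrt{\frac{2(N\rho^2 + 1)}{N^2\rho^2}\log\left(\frac{\sqrt{N\rho^2 + 1}}{\alpha}\right)}.$$

We use the power analysis described above, except we substitute $\tilde{\sigma}_{\Delta}$ for $\hat{\sigma}_{\Delta}$.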
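A sketch of the sequential-testing adjustment: compute $\tilde{\sigma}_{\Delta}$ and feed it into the usual 2-sided power formula. It assumes the asymptotic confidence-sequence half-width and the choice of $\rho$ from the tuning parameter $N^{*}$ described in this section; the inputs are hypothetical, and $N^{*}$ is simply set equal to the sample size here.

```python
from math import log, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def sequential_standard_error(se: float, n: float, n_star: float, alpha: float = 0.05) -> float:
    """Inflated standard error sigma_tilde, used in place of se under sequential testing."""
    rho = sqrt((-2 * log(alpha) + log(-2 * log(alpha) + 1)) / n_star)
    half_width = se * sqrt(n) * sqrt(
        2 * (n * rho**2 + 1) / (n**2 * rho**2) * log(sqrt(n * rho**2 + 1) / alpha)
    )
    return half_width / STD_NORMAL.inv_cdf(1 - alpha / 2)


def power_two_sided(delta: float, se: float, alpha: float = 0.05) -> float:
    z = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return 1 - STD_NORMAL.cdf(z - delta / se) + STD_NORMAL.cdf(-z - delta / se)


# Hypothetical inputs: the tuning parameter n_star equals the sample size.
se, n = 0.01, 250_000
seq_se = sequential_standard_error(se, n, n_star=n)
print(f"standard error inflation: {seq_se / se:.2f}")
print(f"fixed-horizon power {power_two_sided(0.028, se):.2f}, "
      f"sequential power {power_two_sided(0.028, seq_se):.2f}")
```

With these inputs the standard error is inflated by a factor of about 1.55, so power at the same effect drops noticeably, consistent with the statement that enabling sequential testing reduces power.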